• Privileged instructions can be issued only in kernel mode.

• Must ensure that a user program can never gain control of the computer in kernel mode. Otherwise it could perform undesirable actions, e.g. a user program that, as part of its execution, stores a new address in the interrupt vector.

<--MEMORY PROTECTION-->

• Must provide memory protection at least for the interrupt vector and the interrupt service routines.

• In order to have memory protection, add two registers that determine the range of legal addresses a program may access:

– Base Register – holds the smallest legal physical memory address.

– Limit Register – contains the size of the range

• Memory outside the defined range is protected.
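The base/limit check described above can be sketched in a few lines. This is only an illustration of the comparison the hardware performs on every memory access; the names `check_address` and `MemoryProtectionFault` are hypothetical and the register values are made up for the example.

```python
class MemoryProtectionFault(Exception):
    """Raised when a program touches an address outside its legal range."""


def check_address(address: int, base: int, limit: int) -> int:
    """Return the address if it lies in [base, base + limit), else raise.

    base  -- smallest legal physical address (the base register)
    limit -- size of the legal range (the limit register)
    """
    if base <= address < base + limit:
        return address
    # In real hardware this would trap to the operating system.
    raise MemoryProtectionFault(f"illegal address {address}")
```

With an assumed base of 300040 and limit of 120900, addresses 300040 through 420939 pass the check, and anything else is treated as a protection fault.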

<--CPU PROTECTION-->

• Timer

– interrupts computer after specified period to ensure operating system maintains control.

– Timer is decremented every clock tick.

– When timer reaches the value 0, an interrupt occurs.

• Timer commonly used to implement time sharing.

• Timer also used to compute the current time.

• Load-timer is a privileged instruction.
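The timer behaviour above can be modelled as a small countdown. This is a simplified sketch, not how any particular CPU implements it; the `Timer` class, its method names, and the `kernel_mode` flag are assumptions made for illustration.

```python
class Timer:
    """Toy countdown timer that signals an interrupt when it reaches 0."""

    def __init__(self) -> None:
        self.count = 0

    def load(self, ticks: int, kernel_mode: bool) -> None:
        """Load-timer is privileged: refuse the request outside kernel mode."""
        if not kernel_mode:
            raise PermissionError("load-timer is a privileged instruction")
        self.count = ticks

    def tick(self) -> bool:
        """Decrement on a clock tick; return True when the interrupt fires."""
        if self.count > 0:
            self.count -= 1
            return self.count == 0
        return False
```

Because only the kernel can reload the counter, a user program cannot postpone the interrupt that hands control back to the operating system.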

• Storage systems organized in hierarchy.

– Speed

– Cost

– Size

– Volatility

<--STORAGE HIERARCHY (MEMORY HIERARCHY)-->


• Caching – copying information into faster storage system; main memory can be viewed as a cache for secondary storage.

– Improves performance where a large access-time or transfer-rate disparity exists between two components.

– Memory caching: add a cache (a faster and smaller memory) between CPU and main memory

--> When some data is needed, check whether it is in the cache

--> If yes, use the data from the cache

--> If not, use the data from main memory and put a copy in the cache

– Disk caching: main memory can be viewed as a cache for disks
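The lookup steps above (check the cache, use it on a hit, otherwise fetch from main memory and keep a copy) can be sketched as follows. The `CachedMemory` class is hypothetical, and the oldest-entry-first eviction is an assumption added so the cache stays small; the notes do not specify a replacement policy.

```python
class CachedMemory:
    """Small cache in front of a slower 'main memory' dictionary."""

    def __init__(self, main_memory: dict, capacity: int) -> None:
        self.main_memory = main_memory
        self.capacity = capacity
        self.cache = {}  # dicts keep insertion order, oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        # Step 1: check whether the data is already in the cache.
        if address in self.cache:
            self.hits += 1
            return self.cache[address]
        # Step 2: on a miss, go to main memory.
        self.misses += 1
        value = self.main_memory[address]
        # Assumed policy: evict the oldest entry when the cache is full.
        if len(self.cache) >= self.capacity:
            oldest = next(iter(self.cache))
            del self.cache[oldest]
        # Step 3: keep a copy in the cache for next time.
        self.cache[address] = value
        return value
```

The same pattern applies one level down, with main memory playing the role of the cache and the disk playing the role of the slower store.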

• Cache coherency in multiprocessor systems

- Each CPU has a local cache

- A copy of X may exist in several caches --> must make sure that an update of X in one cache is immediately reflected in all other caches where X resides

- Hardware problem

• Cache consistency in distributed systems

- A master copy of the file resides at the server machine

- Copies of the same file scattered in caches of different client machines

- Must keep the cached copies consistent with the master file

- OS problem

• Secondary Storage – extension of main memory that provides large nonvolatile storage capacity.

• Magnetic Disks

– rigid metal or glass platters covered with magnetic recording material.

– Disk surface is logically divided into tracks, which are subdivided into sectors.

– The disk controller determines the logical interaction between the device and the computer.

• Magnetic Tapes

Magnetic tape is a medium for magnetic recording, generally consisting of a thin magnetizable coating on a long and narrow strip of plastic. Nearly all recording tape is of this type, whether used for recording audio or video or for computer data storage. It was originally developed in Germany, based on the concept of magnetic wire recording. Devices that record and play back audio and video using magnetic tape are generally called tape recorders and video tape recorders respectively. A device that stores computer data on magnetic tape can be called a tape drive, a tape unit, or a streamer.

Magnetic tape revolutionized the broadcast and recording industries. In an age when all radio (and later television) was live, it allowed programming to be prerecorded. In a time when gramophone records were recorded in one take, it allowed recordings to be created in multiple stages and easily mixed and edited with a minimal loss in quality between generations. It is also one of the key enabling technologies in the development of modern computers. Magnetic tape allowed massive amounts of data to be stored in computers for long periods of time and rapidly accessed when needed.

Today, many other technologies exist that can perform the functions of magnetic tape. In many cases these technologies are replacing tape. Despite this, innovation in the technology continues and tape is still widely used.

Device-status table contains an entry for each I/O device indicating its type, address, and state.

- Interrupts and Traps. A great deal of the kernel consists of code that is invoked as the result of an interrupt or a trap.

- While the words "interrupt" and "trap" are often used interchangeably in the context of operating systems, there is a distinct difference between the two.

- An interrupt is a CPU event that is triggered by some external device.

- A trap is a CPU event that is triggered by a program. Traps are sometimes called software interrupts. They can be deliberately triggered by a special instruction, or they may be triggered by an illegal instruction or an attempt to access a restricted resource.

When an interrupt is triggered by an external device, the hardware saves the status of the currently executing process, switches to kernel mode, and enters a routine in the kernel. This routine is a first-level interrupt handler. It can either service the interrupt itself or wake up a process that has been waiting for the interrupt to occur.

When the handler finishes, it usually causes the CPU to resume the process that was interrupted. However, the operating system may schedule another process instead.

When an executing process requests a service from the kernel using a trap, the process status information is saved, the CPU is placed in kernel mode, and control passes to code in the kernel. This kernel code is called the system service dispatcher. It examines parameters set before the trap was triggered, often information in specific CPU registers, to determine what action is required. Control then passes to the code that performs the desired action.

When the service is finished, control is returned to either the process that triggered the trap or some other process.

Traps can also be triggered by a fault. In this case the usual action is to terminate the offending process. It is possible on some systems for applications to register handlers that will be invoked when certain conditions occur, such as a division by zero.
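The system service dispatcher described above can be sketched as a table lookup keyed by a value left in a register. Everything here is illustrative: the register name `r0`, the service numbers, and the functions `sys_getpid` and `sys_write` are hypothetical stand-ins, not the interface of any real kernel.

```python
class CPU:
    """Tracks only the current privilege mode."""

    def __init__(self) -> None:
        self.mode = "user"


def sys_getpid() -> int:
    return 42  # made-up process id for the example


def sys_write(text: str) -> int:
    return len(text)  # pretend the text was written; report its length


# Hypothetical service numbers mapped to the code that performs them.
SERVICE_TABLE = {1: sys_getpid, 2: sys_write}


def trap(cpu: CPU, registers: dict):
    """Switch to kernel mode, dispatch on register r0, then return to user mode."""
    cpu.mode = "kernel"
    try:
        handler = SERVICE_TABLE.get(registers["r0"])
        if handler is None:
            raise ValueError(f"unknown service {registers['r0']}")
        return handler(*registers.get("args", ()))
    finally:
        # Control returns to user mode whether or not the service succeeded.
        cpu.mode = "user"
```

A call such as `trap(CPU(), {"r0": 2, "args": ("hello",)})` looks up service 2 and passes it the argument the process placed there before triggering the trap.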

Most computer systems can only execute code found in memory (ROM or RAM); modern operating systems are mostly stored on hard disk drives, LiveCDs and USB flash drives. Just after a computer has been turned on, it doesn't have an operating system in memory. The computer's hardware alone cannot perform the complicated actions of the operating system, such as loading a program from disk on its own; so a seemingly irresolvable paradox is created: to load the operating system into memory, one appears to need to have an operating system already installed.

- In computing, batch processing is a system for processing data with little or no operator intervention. Batches of data are prepared in advance to be processed during regular ‘runs’ (for example, each night). This allows efficient use of the computer and is well suited to applications of a repetitive nature, such as bulk file format conversion, a company payroll, or the production of utility bills.

MULTIPROGRAMMED SYSTEM

- A multiprogrammed OS keeps several jobs (programs) in memory at a time. The operating system picks one of the jobs in memory and begins to execute it. Eventually the job may have to wait for some task, such as a tape to be mounted or an input/output operation to complete. In a multiprogrammed system the OS does not sit idle; it simply switches to another job and executes it. As long as there is always some job ready to execute, the CPU is never idle.
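The switching behaviour can be illustrated with a toy simulation. It is a sketch under simplifying assumptions: each job is a list of `"cpu"` and `"io"` steps, an I/O request is assumed to have completed by the time the job is picked again, and the function name `run_multiprogrammed` is hypothetical.

```python
from collections import deque


def run_multiprogrammed(jobs: dict) -> list:
    """Return the job name for each CPU step, in execution order.

    jobs maps a job name to its list of steps, each "cpu" or "io".
    """
    ready = deque((name, deque(steps)) for name, steps in jobs.items())
    trace = []
    while ready:
        name, steps = ready.popleft()
        # Run the job until it finishes or needs to wait for I/O.
        while steps and steps[0] == "cpu":
            steps.popleft()
            trace.append(name)
        if steps:
            # The job is waiting on I/O: start it and switch to another job.
            steps.popleft()
            if steps:
                ready.append((name, steps))
    return trace
```

For `{"A": ["cpu", "io", "cpu"], "B": ["cpu", "cpu"]}` the CPU runs A, switches to B while A waits, and comes back to A afterwards, so no CPU step is spent idle.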

- Time-sharing is sharing a computing resource among many users by multitasking. Its introduction in the 1960s, and emergence as the prominent model of computing in the 1970s, represents a major shift in the history of computing. By allowing a large number of users to interact simultaneously with a single computer, time-sharing dramatically lowered the cost of providing computing, while at the same time making the computing experience much more interactive.

· TIME-SHARING

-> Time-sharing, or time-slicing, extended the idea of multiprogramming to allow multiple terminals to be connected to the computer, with each in-use terminal being associated with one or more jobs on the computer. The operating system is responsible for switching between the jobs, now often called processes, in a way that favors user interaction. If the context switches occur quickly enough, the user has the impression of having direct access to the computer.
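One common way to realize time-slicing is round-robin scheduling, where each process runs for at most a fixed quantum before the next one gets the CPU. The notes do not name a specific policy, so round-robin is an assumption here, and the function name and burst times are illustrative.

```python
from collections import deque


def round_robin(burst_times: dict, quantum: int) -> list:
    """Return (process, time_run) slices in the order they execute.

    burst_times maps a process name to the total CPU time it needs.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(burst_times.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        schedule.append((name, run))
        if remaining > run:
            # Not finished: go to the back of the queue for another slice.
            queue.append((name, remaining - run))
    return schedule
```

A small quantum makes the switches frequent enough that each terminal user sees steady progress, at the cost of more context switches.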

· REAL TIME

-> A real-time system is used when rigid time requirements have been placed on the operation of a processor or the flow of data; thus it is often used as a control device in a dedicated application. Sensors bring data to the computer. A real-time system has well-defined, fixed time constraints. Processing must be done within the defined constraints, or the system will fail.

· NETWORK

-> Networked systems consist of multiple computers that are networked together, usually with a common operating system and shared resources. Users, however, are aware of the different computers that make up the system.

· DISTRIBUTED

-> A network, in the simplest terms, is a communication path between two or more systems. Distributed systems depend on networking for their functionality.

1. Client-Server Systems

2. Peer-to-Peer Systems

Distributed systems also consist of multiple computers but differ from networked systems in that the multiple computers are transparent to the user. Often there are redundant resources and a sharing of the workload among the different computers, but this is all transparent to the user.

· HANDHELD

-> Handheld systems include personal digital assistants (PDAs) or cellular telephones with connectivity to a network such as the Internet.

-> A stand-alone PC works on its own, whereas a workstation connected to a network can freely share files and databases with other PCs.

A stand-alone PC is a desktop or laptop computer that is used on its own without requiring a connection to a local area network (LAN) or wide area network (WAN). Although it may be connected to a network, it is still a stand-alone PC as long as the network connection is not mandatory for its general use.

In offices throughout the 1990s, millions of stand-alone PCs were hooked up to the local network for file sharing and mainframe access. Today, computers are commonly networked in the home so that family members can share an Internet connection as well as printers, scanners and other peripherals. When the computer is running local applications without Internet access, the machine is technically a stand-alone PC.

A workstation is a high-end microcomputer designed for technical or scientific applications. Intended primarily to be used by one person at a time, workstations are commonly connected to a local area network and run multiuser operating systems. The term workstation has also been used to refer to a mainframe computer terminal or a PC connected to a network.

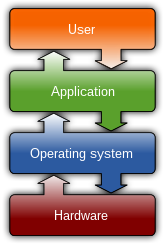

USER VIEW

The user view of the computer varies by the interface being used. Most computer users sit in front of a PC, consisting of a monitor, keyboard, mouse and system unit. Such a system is designed for one user to monopolize its resources, to maximize the work that the user is performing. In this case, the operating system is designed mostly for ease of use, with some attention paid to performance, and none paid to resource utilization.

Some users sit at a terminal connected to a mainframe or minicomputer. Other users are accessing the same computer through other terminals. These users share resources and may exchange information. The operating system is designed to maximize resource utilization.

Other users sit at workstations, connected to networks of other workstations and servers. These users have dedicated resources at their disposal, but they also share resources such as networking and servers.

Recently, many varieties of handheld computers have come into fashion. These devices are mostly standalone, used singly by individual users. Some are connected to networks, either directly by wire or through wireless modems. Due to power and interface limitations they perform relatively few remote operations. These operating systems are designed mostly for individual usability, but performance per amount of battery life is important as well.

Some computers have little or no user view. For example, embedded computers in home devices and automobiles may have a numeric keypad, and may turn indicator lights on or off to show status, but mostly they and their operating systems are designed to run without user intervention.

SYSTEM VIEW

We can view an operating system as a resource allocator. A computer system has many resources - hardware and software - that may be required to solve a problem. The operating system acts as the manager of these resources.

An operating system can also be viewed as a control program that manages the execution of user programs to prevent errors and improper use of the computer. It is especially concerned with the operation and control of I/O devices.

We have no universally accepted definition of what is part of the operating system. A simple viewpoint is that it includes everything a vendor ships when you order “the operating system”.

A more common definition is that the operating system is the one program running at all times on the computer (usually called the kernel), with all else being application programs. This is the one that we generally follow.

A peer-to-peer based network:

SMP systems allow any processor to work on any task no matter where the data for that task are located in memory; with proper operating system support, SMP systems can easily move tasks between processors to balance the workload efficiently.

Whereas a symmetric multiprocessor or SMP treats all of the processing elements in the system identically, an ASMP system assigns certain tasks only to certain processors. In particular, only one processor may be responsible for fielding all of the interrupts in the system or perhaps even performing all of the I/O in the system. This makes the design of the I/O system much simpler, although it tends to limit the ultimate performance of the system. Graphics cards, physics cards and cryptographic accelerators which are subordinate to a CPU in modern computers can be considered a form of asymmetric multiprocessing. SMP is extremely common in the modern computing world; when people refer to "multi core" or "multi processing" they are most commonly referring to SMP.

GOALS OF THE OPERATING SYSTEM: